Federated Learning

While watching Google I/O Extended 2019, I heard the term "Federated Learning" and was eager to find out what it means. Then I thought I would share it with all of my friends. Here I'll try to give you a short introduction to federated learning. I have also given you a reference for more information on it. 👉 visit this

Okay, let's continue our topic😉

What is federated learning?

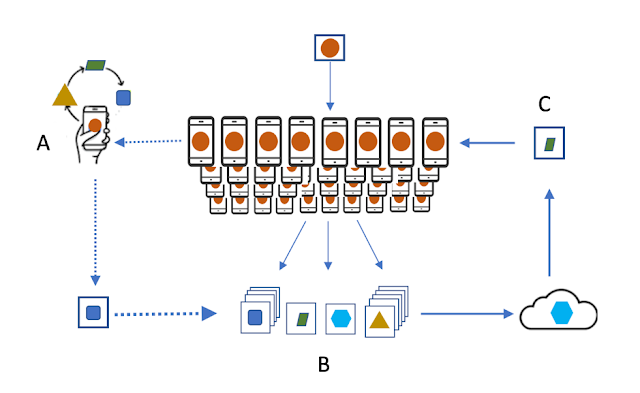

Federated learning is a way to train a model while your data stays on your own device. It is also designed with communication efficiency in mind. Federated Learning is a machine learning setting where the goal is to train a high-quality centralized model with training data distributed over a large number of clients, each with an unreliable and relatively slow network connection.

It is an algorithmic approach that lets participants build machine learning models while keeping the data at its source. Participants share only those models with the servers in the data centers, which improves privacy and reduces communication costs.

Imagine that several parties, such as devices or organizations, each hold data that could be used to train a model.

But the owners of this data may not agree to share it with the data centers because of privacy concerns or bandwidth challenges.

Federated learning, however, allows everyone to build a model while keeping the data at its source. In federated learning, each device or entity trains its own model locally, and it is that model, not the data, that is shared with the servers in the data centers.

The server combines these models into a single federated model, and it never has direct access to the training data.

In this way, it helps to preserve privacy and reduce communication costs.
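To make the idea above a little more concrete, here is a minimal sketch in plain Python. It is only an illustration under my own assumptions: the tiny linear model, the made-up client data, and the names `local_update`, `federated_average` and `run_rounds` are all hypothetical, and the size-weighted averaging is one simple way a server could combine models, not necessarily what any real system does.

```python
def local_update(weights, data, lr=0.1, epochs=5):
    """Train a tiny model y = w*x + b on ONE client's private data.

    Only the resulting weights leave this function; the data stays local.
    """
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y   # prediction error on one sample
            w -= lr * err * x       # gradient step for the slope
            b -= lr * err           # gradient step for the intercept
    return (w, b)


def federated_average(client_weights, client_sizes):
    """Server side: combine client models, weighted by local dataset size."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return (w, b)


def run_rounds(clients, rounds=20):
    """Repeat: send the global model out, train locally, average the results."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in clients]
        sizes = [len(data) for data in clients]
        # The server only ever sees `updates` and `sizes`, never `data`.
        global_weights = federated_average(updates, sizes)
    return global_weights


# Hypothetical private datasets, all generated from y = 2x + 1.
clients = [
    [(x, 2 * x + 1) for x in (-1.0, 0.0, 1.0)],
    [(x, 2 * x + 1) for x in (-0.5, 0.5)],
    [(x, 2 * x + 1) for x in (-1.0, -0.5, 0.5, 1.0)],
]

if __name__ == "__main__":
    print(run_rounds(clients))
```

Notice that `federated_average` receives only weights and dataset sizes. That is the whole point: the raw `(x, y)` pairs never leave the client.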

Abstract:

Federated Learning is a machine learning setting where the goal is to train a higher quality centralized model with training data distributed over a large number of clients each with an unreliable and relatively slow network connection. We consider learning algorithms for this setting where on each round, each client independently computes an update to the current model based on its local data and communicates this update to a central server, where the client-side updates are aggregated to compute a new global model. The typical clients in this setting are mobile phones, and communication efficiency is of utmost importance. In this paper, we propose two ways to reduce uplink communication costs. The proposed methods are evaluated on the application of training a deep neural network to perform image classification. Our best approach reduces the upload communication required to train a reasonable model by two orders of magnitude.[Ai.google. (2019). [online] Available at: https://ai.google/research/pubs/pub45648 [Accessed 10 May 2019].]
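The abstract does not say here which two methods the paper proposes, so I won't guess. Just to give a feeling for what "reducing uplink communication" can look like, here is a small sketch of one generic trick, top-k sparsification: the client uploads only the few largest entries of its update instead of the whole thing. This is my own illustrative choice, not the paper's method, and the names `sparsify_top_k` and `densify` are hypothetical.

```python
def sparsify_top_k(update, k):
    """Client side: keep only the k largest-magnitude entries of an update.

    Returns (index, value) pairs, which is all that gets uploaded.
    """
    ranked = sorted(range(len(update)), key=lambda i: abs(update[i]), reverse=True)
    return [(i, update[i]) for i in sorted(ranked[:k])]


def densify(pairs, size):
    """Server side: rebuild a full-length update, filling the gaps with zeros."""
    dense = [0.0] * size
    for i, value in pairs:
        dense[i] = value
    return dense


if __name__ == "__main__":
    update = [0.01, -0.9, 0.02, 0.5, -0.03, 0.0]  # made-up model update
    upload = sparsify_top_k(update, k=2)          # 2 pairs instead of 6 numbers
    print(upload)
    print(densify(upload, len(update)))
```

The trade-off is that the server gets an approximate update, so the saving in upload size comes at some cost in accuracy per round.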

Thank You!!!

sanduniisa.

Stay Tuned With Me😊
